Tokenization

Tokenization is a data protection technique that replaces sensitive data with a surrogate value (token) that has no intrinsic meaning or value. The original data is stored separately and securely.

Tokenization is, along with encryption, one of the key techniques of data protection.

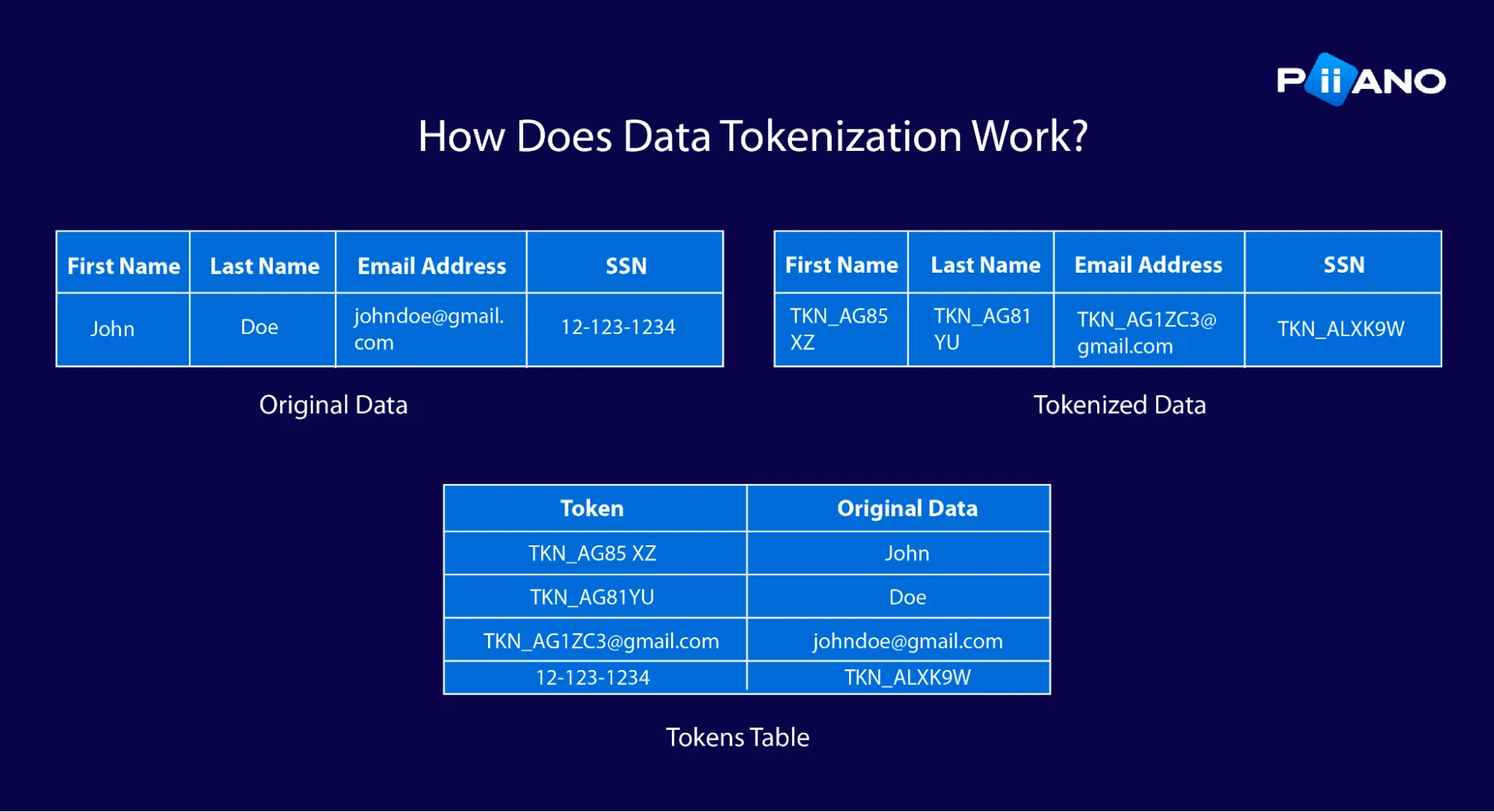

How does data tokenization work?

The tokenization process involves several steps:

- Data classification and identification. Identify the sensitive data elements in a customer data record that require protection, e.g. credit card numbers, Social Security numbers (SSNs), or other personally identifiable information (PII).

- Token generation. Generate a non-sensitive token for each sensitive data element. Different algorithms serve different needs, e.g. mapping the same data consistently to the same token, or preserving the format of the original data.

- Token mapping. Store the sensitive data and the corresponding tokens in a key-value “token table”, and replace the original data with tokens everywhere else. Systems outside the token table then hold only tokens, not sensitive data.

- Data security. Protect the token table in a highly secure vault, typically featuring record-level encryption, granular access controls, comprehensive auditing, and more.

- Tokenized data usage. Use the tokens in daily operations. When needed, and with the right permissions, the tokenization engine performs a lookup on the token table to retrieve the original data.
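The steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `TokenVault` class, the `tok_` prefix, the `authorized` flag, and the sample record are all hypothetical, and a real vault would add encryption, persistent storage, and proper access control.

```python
import secrets


class TokenVault:
    """Toy in-memory token table (hypothetical, not a real product API)."""

    def __init__(self):
        self._token_to_value = {}  # token -> original value
        self._value_to_token = {}  # original value -> token

    def tokenize(self, value: str) -> str:
        # Consistent mapping: the same value always gets the same token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        # The token is random, so it cannot be derived from the value.
        token = "tok_" + secrets.token_hex(8)
        while token in self._token_to_value:  # retry on a (very unlikely) collision
            token = "tok_" + secrets.token_hex(8)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str, authorized: bool = False) -> str:
        # Lookup on the token table, gated by a simplified permission check.
        if not authorized:
            raise PermissionError("caller may not detokenize")
        return self._token_to_value[token]


vault = TokenVault()

# Classification is hard-coded here; real systems discover these fields.
SENSITIVE_FIELDS = {"card_number", "ssn"}
record = {
    "customer_id": "c-1001",
    "card_number": "4111111111111111",
    "ssn": "078-05-1120",
}

# Replace sensitive fields with tokens; everything else passes through.
tokenized_record = {
    key: vault.tokenize(value) if key in SENSITIVE_FIELDS else value
    for key, value in record.items()
}
```

Downstream systems would store and pass around `tokenized_record`. Only a caller that passes the permission check can turn a token back into the original value with `vault.detokenize(token, authorized=True)`.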

Benefits of tokenization

Data breaches are a constant threat. But what if stolen data were useless to attackers? Data tokenization can make that happen.

This process replaces sensitive information like credit card numbers with random tokens. These tokens hold no value on their own, minimizing damage from a breach.

Data tokenization helps mitigate the risks associated with storing sensitive information:

- Breaches cause less damage. Even if hackers access your systems, they’ll only find useless tokens, not real data. To reach the real data, they’d also need to breach the token table in your data privacy vault, which is isolated from your other systems and protected by encryption, access controls, and auditing.

- Secure collaboration. When sensitive data is properly tokenized, it can be securely accessed by various stakeholders, such as data analysts and QA engineers, and all access is recorded to audit logs. This enables data democratization and collaboration without compromising data security.

- Secure data sharing across systems. You can move tokenized data between applications in your organization without compromising security. The original data remains secure while authorized users can access it through tokens.

- Granular access control. Tokenization allows you to set specific permissions and update them easily without affecting your applications.

- Simplified data management. Manage all your sensitive data centrally with tokens. Search, manage permissions, and delete tokens, all in one place.
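As a rough sketch of how granular access control and audit logging might fit together, the example below checks a per-role policy before every detokenization and records each attempt. The role names, the `POLICY` structure, the sample tokens, and the `detokenize` function are hypothetical and do not reflect the API of any particular vault.

```python
from datetime import datetime, timezone

# Hypothetical policy: which roles may read which fields in clear text.
POLICY = {
    "support_agent": {"email"},
    "billing_service": {"email", "card_number"},
}

# Hypothetical token table: token -> (field name, original value).
TOKEN_TABLE = {
    "tok_email_01": ("email", "jane@example.com"),
    "tok_card_01": ("card_number", "4111111111111111"),
}

AUDIT_LOG = []


def detokenize(token: str, role: str) -> str:
    """Return the original value if the role is allowed; log every attempt."""
    field, value = TOKEN_TABLE[token]
    allowed = field in POLICY.get(role, set())
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "role": role,
        "token": token,
        "allowed": allowed,
    })
    if not allowed:
        raise PermissionError(f"{role} may not read {field}")
    return value
```

With this policy, `detokenize("tok_email_01", "support_agent")` returns the email address, while the same role asking for `tok_card_01` gets a `PermissionError`. Both attempts end up in `AUDIT_LOG`. Changing who can see what only means editing `POLICY`; the applications holding the tokens are untouched.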

Data tokenization strengthens security, eases regulatory compliance, and builds customer trust. It also enables data democratization by allowing secure access to pseudonymized data across various stakeholders, fostering innovation and collaboration. It’s a user-friendly solution that integrates seamlessly with various systems, making it a smart choice for businesses of all sizes.

Tokenization or encryption?

Encryption and tokenization are valuable tools for data protection, but they serve different purposes and have different strengths. They differ in operation, application, and system integration, catering to various use cases.

Encryption converts data into a coded format (ciphertext) to prevent unauthorized access. The conversion is reversible with the corresponding decryption key. Encryption is versatile and can be applied at different levels: from files to data traffic.

Opt for encryption when fast access to data is crucial and data must be secured in transit. It serves well in environments that demand quick decryption for authorized use while maintaining rigorous access control.

Tokenization shines for:

- Controlling access to stored data.

- Minimizing the scope of compliance requirements you need to adhere to, such as the PCI DSS requirements.

While encryption scrambles data using a mathematical algorithm and a key, tokenization replaces sensitive data with meaningless, non-exploitable tokens, keeping the original data encrypted in a secure and isolated vault. This approach removes the original sensitive data from conventional storage, minimizes the attack surface, streamlines access control, and significantly simplifies compliance with data protection regulations.

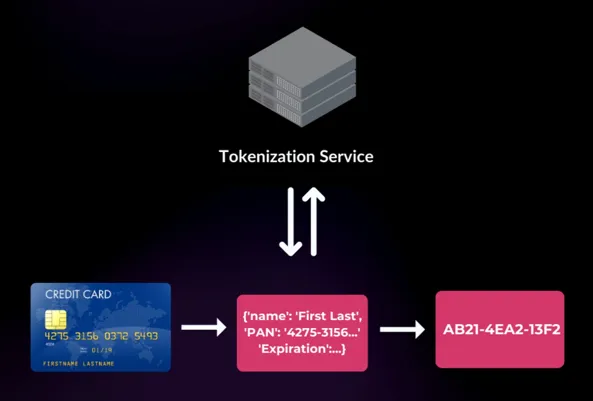

Tokenization works well for protecting data while supporting its usage, e.g. tokenizing a real credit card number to a random non-sensitive token that looks like a credit card number. The token has no mathematical relation to the original credit card number; the original is retrieved by looking up the token in the token table.
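A minimal sketch of such a format-preserving token, assuming a simple in-memory lookup table: each digit is replaced by a random digit and separators are kept in place. The names `token_table`, `tokenize_card`, and `detokenize_card` are hypothetical. This is random substitution with a lookup, not format-preserving encryption, and a real system would keep the table inside the vault.

```python
import secrets

DIGITS = "0123456789"

# token -> original card number (would live in the vault in practice)
token_table = {}


def tokenize_card(card_number: str) -> str:
    """Return a random token with the same length and digit/separator layout."""
    while True:
        token = "".join(
            secrets.choice(DIGITS) if ch in DIGITS else ch
            for ch in card_number
        )
        # Retry on the rare collision with the input or an existing token.
        if token != card_number and token not in token_table:
            token_table[token] = card_number
            return token


def detokenize_card(token: str) -> str:
    """Recover the original number by lookup; nothing is computed from the token."""
    return token_table[token]
```

For an input such as `"4111-1111-1111-1111"`, the token is another 19-character string with dashes in the same positions, so it fits existing database columns and validation that only checks length and layout. Because the digits are random, the token will usually not pass a Luhn checksum.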

Even if a breach occurs, the stolen tokens are useless without access to the vault, rendering the data inaccessible and maintaining its confidentiality. This extra layer of security makes tokenization an especially powerful choice for safeguarding sensitive information.

Tokenization is ideal for reducing the compliance burden and safeguarding data-in-use, excelling in scenarios where sensitive data requires protection while still allowing reference to a securely stored source of truth.

- Breach protection. Tokens carry no exploitable value on their own if stolen.

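- Reduced compliance scope. Systems that hold only tokens typically face fewer requirements under standards and regulations such as PCI DSS, HIPAA, and GDPR. Note that tokenized personal data is pseudonymized rather than anonymized, so it can still be regulated.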

- Flexibility. Tokens can be generated to match the format of the original data, which makes them well suited to legacy systems.

- Storage efficiency. Tokens generally require less storage space than encrypted data.

- Single source of truth. Centralizing sensitive data in one tokenization system simplifies management.

- Data deletion. Supports compliance with the Right to be Forgotten (RTBF) by simplifying the deletion of personal information.

- Granular control. Access policies can be set per token or per field, whereas a shared encryption key unlocks everything that was encrypted with it.

- Simplicity. Properly implemented tokenization systems are typically easier to manage than complex encryption frameworks.
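The data deletion point can be illustrated with a short sketch, assuming the vault records which data subject each entry belongs to. The table layout, the subject ids, and `forget_subject` are hypothetical. Deleting the vault entries leaves any tokens still stored in other systems unresolvable, without those systems having to be changed.

```python
# Hypothetical vault contents: token -> (data subject id, original value)
vault = {
    "tok_a1": ("user-17", "jane@example.com"),
    "tok_b2": ("user-17", "4111111111111111"),
    "tok_c3": ("user-42", "sam@example.com"),
}


def forget_subject(subject_id: str) -> int:
    """Delete every vault entry for one data subject; return how many were removed."""
    doomed = [tok for tok, (owner, _) in vault.items() if owner == subject_id]
    for tok in doomed:
        del vault[tok]
    return len(doomed)
```

After `forget_subject("user-17")`, the tokens `tok_a1` and `tok_b2` may still appear in downstream databases and logs, but they no longer map to any personal data.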