Data anonymization

Data anonymization is a data deidentification technique that involves irreversibly transforming personal data so that it is unfeasible to identify an individual directly or indirectly. The purpose of anonymization is to protect privacy while still allowing the data to be used for analysis, research, visualization, or machine learning.

Some use cases for anonymized data include:

- Sharing medical records for research while protecting patient identities.

- Analyzing trends in consumer behavior without exposing personal data.

- Releasing demographic data as public datasets.

Anonymized data can be shared more freely within an organization since employees accessing it cannot identify the individuals it pertains to. Data anonymization can therefore promote a more open and collaborative data-sharing environment. For example, a large retail company that gathers customer transaction data can anonymize it, enabling teams such as marketing and inventory management to analyze the data without compromising individual customer privacy.

However, while ensuring maximum privacy, anonymized data loses much of its utility outside of analysis, research, and machine learning scenarios. For business use cases that require the ability to map transformed data back to a specific individual, pseudonymization should be the technique of choice.

Data anonymization techniques

Some common data anonymization techniques include:

- Data masking: obscuring specific parts of data, such as hiding every character in a name except for the initial, or replacing most digits in a credit card number with filler characters.

- Generalization: reducing the precision of data, such as changing a specific date of birth to just the year, or replacing the exact address “123 Main St” with the street name “Main St”.

- Bucketing: a specific type of generalization where individual data values are grouped into predefined ranges or “buckets” — for example, ages 21, 25, and 29 are all replaced with a bucket of “20–30”.

Data anonymization and data protection laws

Anonymized data, when processed to ensure irreversibility, falls outside the scope of GDPR and other data protection laws.

For example, according to GDPR’s Recital 26, the principles of data protection do not apply to anonymous information. GDPR is not concerned with the processing of such data, including for statistical or research purposes.

Similarly, anonymized data is exempt from the data protection rules outlined in CCPA, HIPAA, and Canada’s PIPEDA.

If a company can demonstrate that a data breach did not result in the loss of personal data, regulatory bodies typically disregard it, as anonymized data is not subject to these regulations.

Anonymization vs pseudonymization

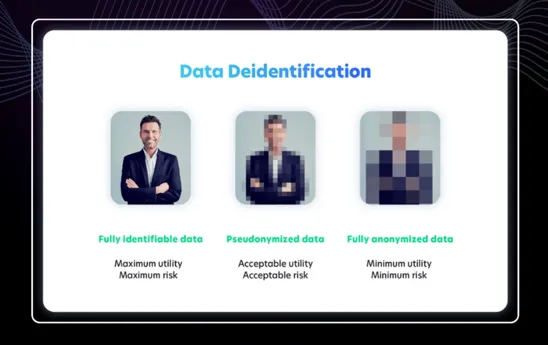

It’s important to note that anonymization is different from pseudonymization: the latter reduces the risk of identification but can be reversed, whereas anonymization is meant to be irreversible.

Pseudonymization involves replacing personal data with pseudonyms or tokens but maintains the connection with the original personal data in a secure way. Pseudonymized data cannot be linked to a person’s identity on its own. However, unlike anonymization, pseudonymized data can be re-identified using an additional piece of information that is kept secure elsewhere.

Pseudonymization is crucial in scenarios where identity recognition is needed without compromising privacy. Compared to anonymization, pseudonymization enables better data utility while keeping risk acceptable:

Pseudonymization is often implemented using a technique called tokenization.